Retrieval-Augmented Generation (RAG)

RAG enriches an LLM’s responses by fetching and integrating relevant external knowledge at query time.
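
As a rough illustration of the retrieve-then-generate flow described above, the sketch below ranks a few in-memory documents by word overlap with the query and builds a grounded prompt from the best match. The sample documents, the `retrieve` and `build_prompt` helpers, and the overlap scoring are all assumptions made for this example; a real deployment would more likely retrieve with embeddings from a vector store and then send the prompt to an LLM.

```python
import re

# Hypothetical knowledge base; real systems store chunks in a vector database.
DOCS = [
    "Password resets are handled from the account settings page.",
    "Invoices are emailed on the first business day of each month.",
    "Support is available by chat from 9am to 5pm on weekdays.",
]

def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query, docs, k=1):
    """Return the k documents that share the most words with the query."""
    q = tokenize(query)
    ranked = sorted(docs, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]

def build_prompt(query, docs, k=1):
    """Place the retrieved passages in the prompt as grounding context."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )

prompt = build_prompt("When are invoices emailed?", DOCS)
```

The resulting `prompt` string is what would be passed to the model, so the answer is anchored in the retrieved passage rather than in the model's memory alone.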

Why It Matters

- Cuts AI “hallucinations” by anchoring outputs in factual sources

- Surfaces live, up-to-date information without retraining

Use Cases

- Spire Support Agent

- Internal Knowledge Chatbot

Tech Stack Tags

Azure GPT-4.1 · LangGraph · Pinecone · Chroma

Large Language Models (LLM)

Transformer-based neural networks trained on massive text corpora to generate versatile, human-quality text.

Why It Matters

- Automates summarization, translation, code generation, and more

- Drives productivity by handling diverse NLP tasks without custom training

Use Cases

- Spire Support Agent (intent detection & auto-responses)

- Audience Intelligence (targeted campaign generation)

Tech Stack Tags

Azure GPT-4.1 · GPT-4 · Claude · LLaMA · Azure OpenAI

Small Language Models (SLM)

Compact transformer models optimized for speed, cost-efficiency, and on-device deployment.

Why It Matters

- Delivers millisecond-level responses for real-time apps

- Reduces infrastructure and energy costs

Use Cases

- Retail FAQ Chatbots

- E-commerce Content Tagging

Tech Stack Tags

Distilled GPT · LoRA-tuned LLaMA · Hugging Face Transformers

Fine-Tuning

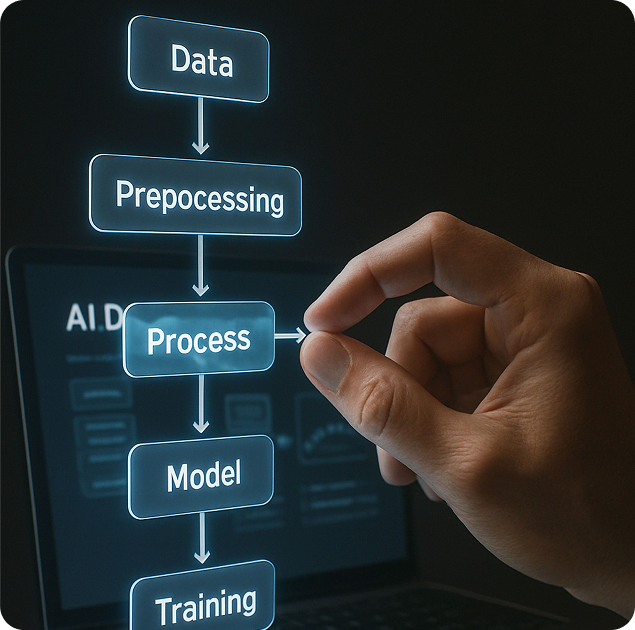

Adapts a pretrained LLM on your proprietary data to learn domain terminology and workflows.

Why It Matters

- Delivers domain-accurate outputs with minimal data

- Cuts development time and cost versus training from scratch

Use Cases

- Insurance Underwriting (30% faster approvals)

- Legal Regulation Extraction

Tech Stack Tags

LoRA · Custom Data Pipelines

Prompt Engineering

Crafts structured instructions and examples to steer an LLM toward desired format, tone, and accuracy.
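
A minimal sketch of such a structured prompt, assuming a release-notes task: it combines a role, a format rule, and two few-shot examples, then leaves an open slot for the model to complete. The `EXAMPLES` data and the `build_release_note_prompt` helper are hypothetical and exist only for illustration.

```python
# Hypothetical few-shot examples pairing a commit message with a release note.
EXAMPLES = [
    ("fix: null check in login handler", "Fixed a crash that could occur during login."),
    ("feat: add CSV export to reports", "Reports can now be exported as CSV."),
]

def build_release_note_prompt(commit_message, examples=EXAMPLES):
    """Combine a role, a format rule, few-shot examples, and the new input."""
    lines = [
        "You are a release-notes writer.",
        "Rewrite each commit message as one user-facing sentence.",
        "",
    ]
    for commit, note in examples:
        lines.append(f"Commit: {commit}")
        lines.append(f"Note: {note}")
        lines.append("")
    # End on an open "Note:" slot so the model answers in the same format.
    lines.append(f"Commit: {commit_message}")
    lines.append("Note:")
    return "\n".join(lines)

prompt = build_release_note_prompt("perf: cache dashboard queries")
```

Keeping the template in one function makes the format and tone consistent across calls, and the examples can be swapped without touching the model.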

Why It Matters

- Improves output without any retraining

- Ensures consistent, high-quality results

Use Cases

- AI-Powered Test Generation

- Automated Release Notes

Tech Stack Tags

OpenAI API · Prompt Templates

Embeddings

Numeric vector representations that map semantically similar data closer together in high-dimensional space.
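
To make "closer together" concrete, the sketch below compares vectors with cosine similarity, one common closeness measure for embeddings. The three-dimensional vectors are made up for the example; real embeddings come from a model and usually have hundreds or thousands of dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Made-up toy vectors standing in for model-generated embeddings.
EMBEDDINGS = {
    "contract": [0.9, 0.1, 0.0],
    "agreement": [0.8, 0.2, 0.1],
    "sneakers": [0.0, 0.1, 0.9],
}

sim_related = cosine_similarity(EMBEDDINGS["contract"], EMBEDDINGS["agreement"])
sim_unrelated = cosine_similarity(EMBEDDINGS["contract"], EMBEDDINGS["sneakers"])
```

Here `sim_related` comes out far higher than `sim_unrelated`, which is the property semantic search and recommendations rely on.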

Why It Matters

- Enables semantic search beyond keyword matching

- Powers personalized recommendations

Use Cases

- Legal Search Engine

- E-commerce Recommendation Engine

Tech Stack Tags

Pinecone · Chroma · OpenAI Embeddings

Agents / Agentic Workflows

Autonomous AI orchestrations that plan, execute, and self-correct multi-step processes.
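
The plan, execute, and self-correct cycle can be sketched as a small loop. Everything here is a toy assumption: `plan` returns a fixed list of steps, `execute` stands in for model or tool calls, and the first draft is deliberately incomplete so that the check fails once and the loop has something to correct.

```python
def plan(goal):
    """Hypothetical planner; a real agent would ask an LLM for these steps."""
    return ["draft", "check", "finalize"]

def execute(step, state):
    """Hypothetical executor standing in for tool or model calls."""
    if step == "draft":
        # The first attempt omits the ticket ID so the check fails once.
        if state["attempts"] == 0:
            state["text"] = "Reply"
        else:
            state["text"] = f"Reply for {state['ticket']}"
        state["attempts"] += 1
    elif step == "check":
        state["ok"] = state["ticket"] in state["text"]
    elif step == "finalize":
        state["done"] = True
    return state

def run_agent(goal, ticket, max_retries=3):
    """Run the planned steps, looping back to 'draft' when the check fails."""
    state = {"ticket": ticket, "attempts": 0, "text": "", "ok": False, "done": False}
    steps = plan(goal)
    i, retries = 0, 0
    while i < len(steps):
        state = execute(steps[i], state)
        if steps[i] == "check" and not state["ok"]:
            if retries >= max_retries:
                break  # give up rather than loop forever
            retries += 1
            i = steps.index("draft")  # self-correct: redo the draft
            continue
        i += 1
    return state

result = run_agent("answer ticket", "T-42")
```

The retry cap matters in practice: without it, a check that can never pass would keep the agent looping indefinitely.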

Why It Matters

- Automates complex end-to-end tasks

- Adapts dynamically to intermediate results

Use Cases

- Spire Support Agent Lifecycle

- SDLC Copilot (spec → code → tests → PR)

Tech Stack Tags

LangGraph · LangChain · Custom Orchestrator

Model Distillation

Compresses a large “teacher” model into a smaller “student” model that retains most capabilities.
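
One common way to transfer knowledge from teacher to student is to train the student to match the teacher's temperature-softened output distribution. The sketch below computes that soft-target loss for a single example using only the standard library; the logits are invented, and in practice this term is usually combined with the ordinary training loss inside a deep learning framework.

```python
import math

def softmax(logits, temperature=1.0):
    """Convert logits to probabilities; higher temperature flattens them."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from teacher to student on softened distributions."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))
    # Scaling by T^2 keeps gradient magnitudes comparable across temperatures.
    return kl * temperature ** 2

teacher = [4.0, 1.0, 0.5]  # made-up logits for illustration
loss_close = distillation_loss(teacher, [3.5, 1.2, 0.4])
loss_far = distillation_loss(teacher, [0.5, 1.0, 4.0])
```

A student whose outputs resemble the teacher's gets a small loss (`loss_close`), while one that ranks the classes differently is penalized more (`loss_far`).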

Why It Matters

- Speeds inference by up to 3×

- Cuts compute costs in half

Use Cases

- Mobile On-Device Chatbot

- Automated Content Moderation

Tech Stack Tags

Distillation Libraries · TensorFlow Lite

Vector Database

Specialized stores for indexing and querying vector embeddings instead of text keywords.
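
The core interface of such a store is small: insert a vector under an ID, then ask for the nearest stored vectors to a query. The `TinyVectorIndex` class below is a hypothetical brute-force stand-in written for this example; it is not the API of Pinecone, Chroma, or Weaviate, and production systems rely on approximate nearest-neighbor indexes rather than scanning every item.

```python
import heapq
import math

class TinyVectorIndex:
    """Brute-force, in-memory stand-in for a vector database (illustrative)."""

    def __init__(self):
        self._items = []  # list of (item_id, unit-length vector)

    @staticmethod
    def _normalize(vec):
        norm = math.sqrt(sum(x * x for x in vec))
        return [x / norm for x in vec]

    def upsert(self, item_id, vector):
        """Insert a vector, replacing any existing entry with the same ID."""
        self._items = [(i, v) for i, v in self._items if i != item_id]
        self._items.append((item_id, self._normalize(vector)))

    def query(self, vector, top_k=2):
        """Return the top_k (item_id, cosine similarity) pairs, best first."""
        q = self._normalize(vector)
        scored = ((sum(a * b for a, b in zip(q, v)), i) for i, v in self._items)
        return [(i, score) for score, i in heapq.nlargest(top_k, scored)]

index = TinyVectorIndex()
index.upsert("well-log-1", [0.9, 0.1, 0.0])   # made-up vectors
index.upsert("well-log-2", [0.7, 0.3, 0.1])
index.upsert("seismic-7", [0.0, 0.2, 0.9])
hits = index.query([1.0, 0.0, 0.0], top_k=2)
```

Because vectors are normalized on insert, the dot product in `query` equals cosine similarity, so results are ranked by direction rather than magnitude.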

Why It Matters

- Enables low-latency semantic lookups at scale

- Core component for RAG and similar retrieval systems

Use Cases

- Geoscience Natural-Language Queries

- Customer 360 Profile Search

Tech Stack Tags

Pinecone · Chroma · Weaviate

Guardrails & Safety

Layers of rules, filters, and monitors that enforce ethical, compliant, and policy-aligned AI outputs.
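
One of the simplest filter layers is pattern-based redaction applied to text before it is shown or logged. The sketch below masks email addresses and US Social Security number formats; the two patterns and the `redact` helper are assumptions chosen for illustration and are far from a complete PII policy.

```python
import re

# Illustrative patterns only; real deployments need a much broader set.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}

def redact(text):
    """Replace each match with a bracketed label such as [EMAIL]."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text

safe = redact("Contact jane.doe@example.com, SSN 123-45-6789.")
# safe == "Contact [EMAIL], SSN [SSN]."
```

Regex filters are cheap and predictable, which is why they tend to sit alongside, rather than replace, model-based checks.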

Why It Matters

- Prevents harmful or biased responses

- Ensures regulatory and privacy compliance

Use Cases

- Financial Chatbot Compliance

- Medical Assistant Safety Checks

Tech Stack Tags

Regex Redaction · Schema Filters

AI Hallucinations

When an LLM confidently fabricates false or misleading information not grounded in data.

Why It Matters

- Undermines trust and reliability

- Carries a high risk in critical domains

Use Cases

- Spire RAG + Schema Validation

- Human-in-the-Loop Escalation Systems

Tech Stack Tags

RAG Layers · Confidence Scoring

Multi-modal AI

AI that ingests and reasons over text, images, audio, and video for richer, more context-aware outputs.

Why It Matters

- Delivers a holistic understanding of complex inputs

- Enables novel interactions like video Q&A

Use Cases

- Invoice Image + Text Processing

- Video Ad Voice Cloning

Tech Stack Tags

CLIP · Whisper · Vision-Language Transformers

Open-Source vs Closed-Source Models

Open models (e.g., LLaMA, Falcon) provide public code and weights; closed models (e.g., GPT-4, Claude) are proprietary.

Why It Matters

- Balances flexibility, performance, and cost

- Avoids vendor lock-in with model-agnostic deployment

Use Cases

- Seamless swap between Azure OpenAI & LLaMA

- Enterprise AI Platform best-fit model selection

Tech Stack Tags

ONNX · Triton · OpenAI APIs

Domain-Specific LLMs

Models pretrained or fine-tuned on industry data (legal, medical, finance) to master specialized terminology.

Why It Matters

- Delivers higher accuracy on niche tasks

- Ensures out-of-the-box compliance with industry standards

Use Cases

- U.S. Licensing Research Bot

- Financial Invoice Reconciliation

Tech Stack Tags

Custom Fine-Tuning · Domain Corpora

RLHF (Reinforcement Learning from Human Feedback)

Aligns model behavior with human preferences by optimizing the model against a reward model trained on curated human feedback.
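
A reward model of this kind is commonly trained on pairs of responses in which a human marked one as better. The sketch below shows the pairwise loss often used for that step, assuming the reward model has already produced a scalar score for each response; the function name and the example scores are hypothetical.

```python
import math

def preference_loss(reward_chosen, reward_rejected):
    """Pairwise loss: -log(sigmoid(reward_chosen - reward_rejected))."""
    margin = reward_chosen - reward_rejected
    # -log(sigmoid(m)) equals log(1 + exp(-m)); log1p keeps small values accurate.
    return math.log1p(math.exp(-margin))

loss_agrees = preference_loss(2.0, 0.0)     # model prefers the human's choice
loss_tied = preference_loss(0.0, 0.0)       # model is indifferent
loss_disagrees = preference_loss(0.0, 2.0)  # model prefers the rejected reply
```

The loss shrinks as the reward model scores the human-preferred response higher, which is what lets the later policy-optimization stage (PPO, for example) use that score as its training signal.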

Why It Matters

- Guides models toward desired tone and ethics

- Enables continuous post-deployment refinement

Use Cases

- Empathy-tuned Support Agent

- Brand-Voice Content Generation

Tech Stack Tags

Reward Modeling · PPO · OpenAI RLHF Toolkit

Latency & Inference Optimization

Techniques such as quantization, caching, and pruning that minimize AI response times and compute costs.
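
As an example of the first technique, the sketch below applies symmetric 8-bit quantization to a short list of weights and then restores them, which shows the trade of a small rounding error for values that fit in one byte. The weights are invented, and the per-tensor scheme is an assumption for illustration; libraries such as ONNX Runtime and TensorRT provide their own, more sophisticated quantization paths.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest step and clamp to the int8 range used here.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Map quantized integers back to approximate float values."""
    return [v * scale for v in q]

weights = [0.52, -1.27, 0.003, 0.9]  # made-up values
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

The rounding error per weight is bounded by half a quantization step (`scale / 2`), which is why small weights such as `0.003` can collapse to zero.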

Why It Matters

- Delivers sub-second replies for real-time systems

- Reduces GPU/CPU usage and overall expenses

Use Cases

- 10 s Ticket Processing (Spire Agent)

- 2× Faster High-Traffic Chatbots

Tech Stack Tags

ONNX Runtime · TensorRT · Quantization Libraries